Docker Registry in-cluster kaniko cache

Speed up your kaniko builds using an in-cluster kaniko cache.

Caching image layers can significantly speed up your kaniko builds. When your workspace isn't persisted between builds, local caching isn't an option. In this blog post we'll run our self-hosted GitHub Actions Runner to build a Docker image with kaniko, configured to cache layers in a Docker Registry deployed alongside our installation.

Note: in our example we enable persistence for the cached layers; in production it may be preferable not to persist the cache.

Bootstrap Kubernetes

We can use kind to bootstrap a local Kubernetes cluster:

kind create cluster
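Before installing anything, it can help to confirm the new cluster is reachable. The following is a minimal sketch, assuming kind's default cluster name `kind` (kind prefixes the kubeconfig context with `kind-`); the helper names `kind_context` and `check_cluster` are hypothetical and not part of kind or kubectl:

```shell
# Hypothetical helper: derive the kubeconfig context kind creates for a cluster.
# kind names the context "kind-<cluster name>"; the default cluster name is "kind".
kind_context() {
  printf 'kind-%s\n' "${1:-kind}"
}

# Hypothetical helper: check that the API server and nodes respond.
# Requires kubectl on the PATH and a running kind cluster.
check_cluster() {
  ctx="$(kind_context "${1:-}")"
  kubectl cluster-info --context "${ctx}" && kubectl get nodes --context "${ctx}"
}
```

Running `check_cluster` right after `kind create cluster` should print the control plane address and a single node once the cluster is ready.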

Install nfs-server-provisioner

We use the nfs-server-provisioner from the charts/stable repository to persist the data:

helm repo add stable https://kubernetes-charts.storage.googleapis.com

helm upgrade --install nfs-server \
  --set persistence.enabled=true \
  --set persistence.size=10Gi \
  --set persistence.storageClass=standard \
  --set storageClass.defaultClass=true \
  --set storageClass.name=nfs-client \
  --set storageClass.mountOptions[0]="vers=4" \
  stable/nfs-server-provisioner \
  --wait
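The later charts all request volumes from the `nfs-client` StorageClass, so it may be worth checking that it exists before continuing. A small sketch, assuming kubectl points at the kind cluster; the function name `storage_class_exists` is hypothetical:

```shell
# Hypothetical helper: succeed when the named StorageClass exists.
# Defaults to nfs-client, the name set through storageClass.name above.
storage_class_exists() {
  kubectl get storageclass "${1:-nfs-client}" >/dev/null 2>&1
}
```

For example, `storage_class_exists || echo "nfs-client StorageClass not found"` gives a quick signal before installing the registry.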

Install the Docker Registry

The Docker Registry Helm Chart is also available in the charts/stable repository:

helm install registry \
  --set persistence.enabled=true \
  --set persistence.size=4Gi \
  --set persistence.storageClass=nfs-client \
  --set persistence.deleteEnabled=true \
  stable/docker-registry
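To confirm the registry is answering, one option is to query the base endpoint of the Registry HTTP API v2, which returns HTTP 200 on an unauthenticated registry. This is a sketch under the assumption that the registry is reachable at the URL you pass in; the function name `registry_ready` is hypothetical:

```shell
# Hypothetical helper: succeed when the registry's API v2 base endpoint
# answers with HTTP 200.
# $1 is the registry base URL, e.g. http://localhost:5000 behind a port-forward.
registry_ready() {
  code="$(curl -s -o /dev/null -w '%{http_code}' "$1/v2/")"
  [ "${code}" = "200" ]
}
```

For example, run `kubectl port-forward svc/registry-docker-registry 5000:5000` in a second terminal (the service name matches the registry host used in the workflow later in this post), then call `registry_ready http://localhost:5000 && echo "registry is up"`.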

Install the GitHub Actions Runner

The GitHub Actions Runner Helm Chart is hosted in our ChartMuseum. Install the actions-runner with the dind dependency enabled and configured to use the insecure Docker Registry:

helm repo add lazybit https://chartmuseum.lazybit.ch

helm upgrade --install actions-runner \
  --set global.storageClass=nfs-client \
  --set github.username=${GITHUB_USERNAME} \
  --set github.password=${GITHUB_TOKEN} \
  --set github.owner=${GITHUB_OWNER} \
  --set github.repository=${GITHUB_REPOSITORY} \
  --set persistence.enabled=true \
  --set persistence.certs.existingClaim=certs-actions-runner-dind-0 \
  --set persistence.workspace.existingClaim=workspace-actions-runner-dind-0 \
  --set dind.kaniko=true \
  --set dind.insecureRegistries[0]="registry-docker-registry:5000" \
  --set dind.persistence.enabled=true \
  --set dind.persistence.certs.accessModes[0]=ReadWriteMany \
  --set dind.persistence.certs.size=128Mi \
  --set dind.persistence.workspace.accessModes[0]=ReadWriteMany \
  --set dind.persistence.workspace.size=4Gi \
  --set dind.resources.requests.memory="1Gi" \
  --set dind.resources.requests.cpu="1" \
  --set dind.resources.limits.memory="2Gi" \
  --set dind.resources.limits.cpu="2" \
  --set dind.livenessProbe.enabled=false \
  --set dind.readinessProbe.enabled=false \
  lazybit/actions-runner \
  --wait

Using kaniko in your workflow

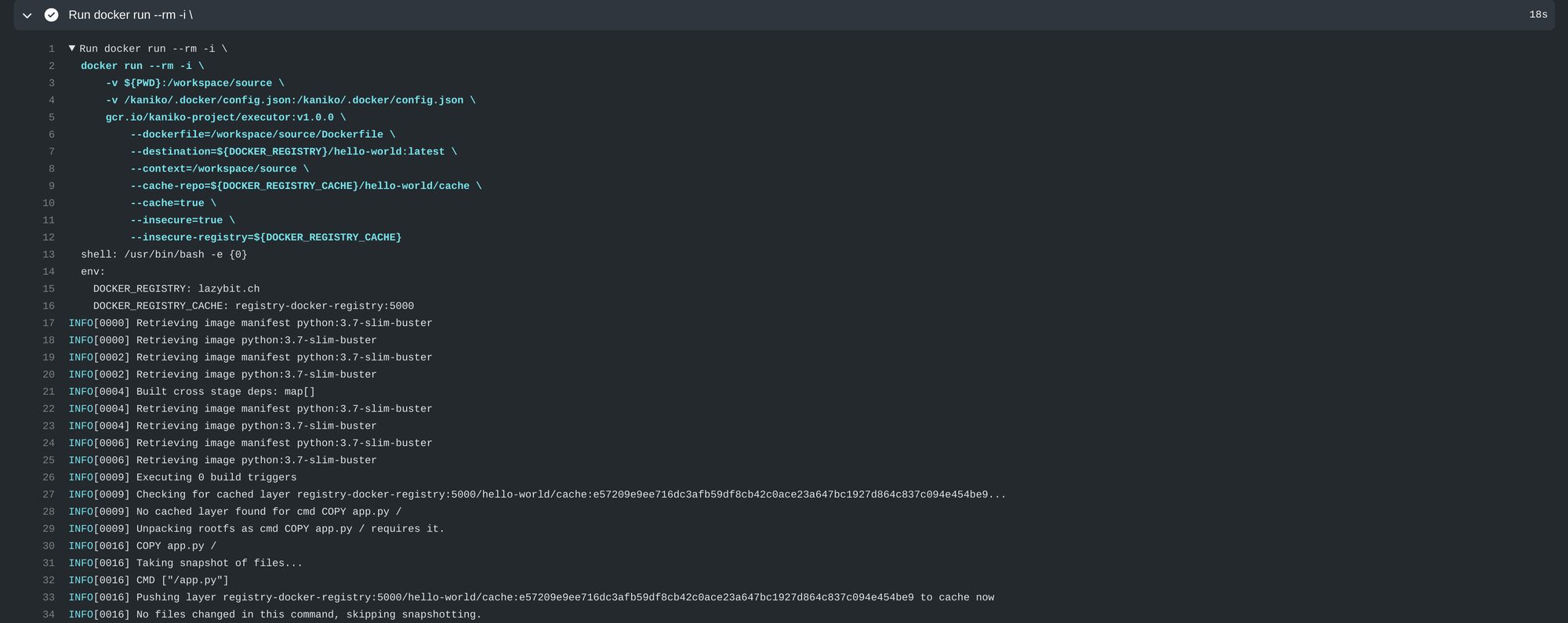

Push the cache layers to our in-cluster Docker Registry and release the tagged image to our Docker Hub repository:

...

env:
  DOCKER_REGISTRY: "lazybit"
  DOCKER_REGISTRY_CACHE: "registry-docker-registry:5000"
jobs:
  kaniko:
    name: kaniko
    needs: lint
    runs-on: self-hosted
    steps:
      - run: |
          docker run --rm -i \
            -v ${PWD}:/workspace/source \
            -v /kaniko/.docker/config.json:/kaniko/.docker/config.json \
            gcr.io/kaniko-project/executor:v1.0.0 \
            --dockerfile=/workspace/source/Dockerfile \
            --destination=${DOCKER_REGISTRY}/hello-world:latest \
            --context=/workspace/source \
            --cache-repo=${DOCKER_REGISTRY_CACHE}/hello-world/cache \
            --cache=true \
            --insecure=true \
            --insecure-registry=${DOCKER_REGISTRY_CACHE}
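After a build has run, the cached layers should show up as tags in the `hello-world/cache` repository of the in-cluster registry. The sketch below builds the Registry HTTP API v2 `tags/list` URL for that repository and fetches it; it assumes the registry is reachable from where you run it (for example through a `kubectl port-forward` to `localhost:5000`), and the function names `cache_tags_url` and `list_cache_tags` are hypothetical:

```shell
# Hypothetical helper: build the tags/list URL for a repository in a
# Docker Registry (HTTP API v2). $1 is host:port, $2 is the repository path.
cache_tags_url() {
  printf 'http://%s/v2/%s/tags/list\n' "$1" "$2"
}

# Hypothetical helper: list the cache tags pushed by kaniko.
# Defaults assume a port-forward to localhost:5000 and the cache repository
# from the workflow above.
list_cache_tags() {
  curl -s "$(cache_tags_url "${1:-localhost:5000}" "${2:-hello-world/cache}")"
}
```

An empty or missing tag list after a build suggests the cache push did not reach the registry, which is worth checking before expecting faster builds.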