GitHub Actions Runners self-hosted in Kubernetes

"You get a GitHub Actions Runner, and you get a GitHub Actions Runner, everybody gets a GitHub Actions Runner".

Have you been looking for self-hosted GitHub Actions Runners for Kubernetes? Look no further! We've got you covered with our public Helm Charts to bootstrap your cluster \o/

In this blog post we'll take a look at a couple of deployment options for installing an actions-runner and optional dind (Docker in Docker) service inside a local kind cluster bootstrapped with nfs-server-provisioner.

Kubernetes in Docker

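We can bootstrap a local cluster using kind. Run as-is, the command below creates a single-node cluster; to get two workers and the control plane, pass a cluster config with --config:

    kind create cluster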
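A minimal sketch of a cluster config with two workers and one control-plane node, using kind's v1alpha4 config format. The file name kind-config.yaml is an assumption and does not come from the original setup:

```shell
# Write a kind cluster config: one control-plane node and two workers.
# The file name kind-config.yaml is an arbitrary choice for this sketch.
cat > kind-config.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
EOF

# Create the cluster from the config (skipped when kind is not installed).
if command -v kind >/dev/null 2>&1; then
  kind create cluster --config kind-config.yaml
fi
```

Once the cluster is up, kubectl get nodes should list three nodes.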

Kubernetes Secrets

The actions-runner and dind images are released to Docker Hub.

We'll need to create an Opaque Secret from the docker config.json that will be bind mounted into the actions-runner container for pushing images to our private registry:

    kubectl create secret generic docker --from-file=${HOME}/.docker/config.json

Tip: With the secret mounted in the pod, it is not necessary to use the docker login actions in the jobs.<job_id>.steps[*].run.

Note: In this example we pull the public images in the helm installation from Docker Hub and push our built docker images to a different private Docker registry.
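If Docker on your machine uses a credential helper (a credsStore entry), ${HOME}/.docker/config.json holds no credentials and the Secret will not authenticate. In that case you could generate a minimal config.json by hand. The sketch below assumes a hypothetical registry, user and password (registry.example.com, ci-bot, changeme) and a hypothetical output directory docker-secret; none of these come from the charts:

```shell
# Hypothetical registry and credentials; replace with your own values.
REGISTRY="${REGISTRY:-registry.example.com}"
REGISTRY_USER="${REGISTRY_USER:-ci-bot}"
REGISTRY_PASSWORD="${REGISTRY_PASSWORD:-changeme}"

# Docker stores credentials as base64("user:password") under auths.<registry>.auth.
AUTH="$(printf '%s:%s' "$REGISTRY_USER" "$REGISTRY_PASSWORD" | base64 | tr -d '\n')"

# Write the generated file into a scratch directory (name is arbitrary).
mkdir -p docker-secret
cat > docker-secret/config.json <<EOF
{
  "auths": {
    "${REGISTRY}": {
      "auth": "${AUTH}"
    }
  }
}
EOF

# Create the Opaque Secret named "docker" with the key config.json
# (skipped when kubectl is not installed).
if command -v kubectl >/dev/null 2>&1; then
  kubectl create secret generic docker \
    --from-file=config.json=docker-secret/config.json
fi
```

The key=path form of --from-file keeps the Secret key named config.json regardless of where the file lives on disk.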

Persistent Volumes

When the actions-runner is installed with the dind dependency, the workspace and certs directories are shared between pods using a persistent NFS volume. The actions-runner's environment is set to connect to the remote docker daemon.
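For illustration, these are the standard docker client variables that point a CLI at a remote TLS-enabled daemon. The values are assumptions, not the chart's actual settings: the service name actions-runner-dind is hypothetical, while port 2376 and /certs/client are the defaults of the official docker:dind image:

```shell
# Assumed values: the service name "actions-runner-dind" is hypothetical;
# port 2376 and /certs/client are the defaults of the official docker:dind image.
export DOCKER_HOST="tcp://actions-runner-dind:2376"
export DOCKER_TLS_VERIFY="1"
export DOCKER_CERT_PATH="/certs/client"

# With these set, the docker CLI talks to the remote daemon instead of a local socket.
echo "docker client target: ${DOCKER_HOST} (certs in ${DOCKER_CERT_PATH})"
```

Inside a running runner pod, env | grep DOCKER shows what the chart actually sets.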

Tip: Beware that when working with a remote docker daemon the path specified when mounting a docker volume must be the remote path, not the local path. The remote docker daemon cannot access the local file system.

Note: Sharing the workspace binds the deployment to a single namespace but enables bind-mounting files in docker runs using the same path on the local and remote filesystems. For example, docker run --rm -i -v ${{ github.workspace }}:/workspace/source -w /workspace/source --entrypoint find alpine:3 . works as expected in a jobs.<job_id>.steps[*].run.

Network File System

Starting with 18.09, the dind variants of the docker image automatically generate TLS certificates. For advanced installations we install an nfs-server-provisioner in our cluster to share the client certificates and workspace between the dind and actions-runner StatefulSets across our nodes.

    helm repo add stable https://kubernetes-charts.storage.googleapis.com
    helm repo update
    helm upgrade --install nfs-server \
        --set persistence.enabled=true \
        --set persistence.size=10Gi \
        --set persistence.storageClass=standard \
        --set storageClass.defaultClass=true \
        --set storageClass.name=nfs-client \
        --set storageClass.mountOptions[0]="vers=4" \
        stable/nfs-server-provisioner \
        --wait

Note: kind supports NFS volumes since v0.9.0.
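Before installing the runner it can help to confirm that the nfs-client storage class provisions ReadWriteMany volumes. The following is a sketch of such a check; the claim name nfs-test, the file name nfs-test-pvc.yaml and the 100Mi size are hypothetical and exist only for this test:

```shell
# Throwaway claim against the nfs-client storage class.
# The name "nfs-test" and the 100Mi size are arbitrary choices for this check.
cat > nfs-test-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
EOF

# Apply, inspect and clean up (skipped when kubectl is not installed).
if command -v kubectl >/dev/null 2>&1; then
  kubectl get storageclass nfs-client
  kubectl apply -f nfs-test-pvc.yaml
  kubectl get pvc nfs-test
  kubectl delete -f nfs-test-pvc.yaml
fi
```

If provisioning works, the claim should reach the Bound status shortly after it is applied.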

GitHub Actions Runner

Let's take a look at a few different ways that we could deploy the charts depending on what the requirements are.

The actions-runner and dind Helm Charts are currently in our ChartMuseum:

    helm repo add lazybit https://chartmuseum.lazybit.ch
    helm repo update

Building without docker

Maybe you don't need access to the docker client for building your artifacts. You can deploy the actions-runner standalone, configured for your repository:

    helm upgrade --install actions-runner \
        --set global.image.pullSecrets[0]=docker-0 \
        --set github.username=${GITHUB_USERNAME} \
        --set github.password=${GITHUB_TOKEN} \
        --set github.owner=${GITHUB_OWNER} \
        --set github.repository=${GITHUB_REPOSITORY} \
        --set dind.enabled=false \
        lazybit/actions-runner \
        --wait

The actions-runner container is based on ubuntu:20.04. Anything installed in the runner's environment will not be persisted if the container crashes.

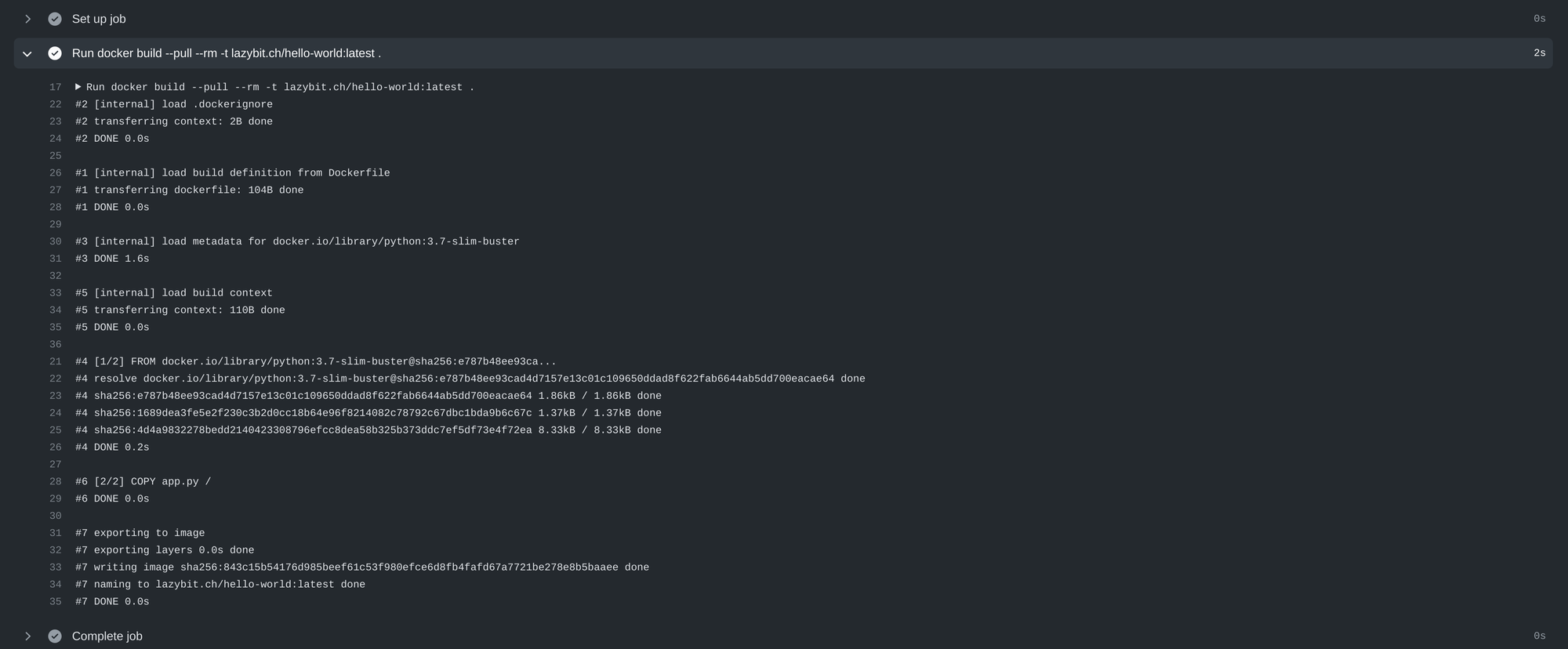

Building with docker

Let's enable the dind dependency to give access to a docker daemon for running docker images in our workflow steps (set dind.dockerSecretName=my-docker-secret to specify an alternative Opaque Secret name to mount for docker):

    helm upgrade --install actions-runner \
        --set global.storageClass=nfs-client \
        --set github.username=${GITHUB_USERNAME} \
        --set github.password=${GITHUB_TOKEN} \
        --set github.owner=${GITHUB_OWNER} \
        --set github.repository=${GITHUB_REPOSITORY} \
        --set docker=true \
        --set rbac.create=true \
        --set persistence.enabled=true \
        --set persistence.certs.existingClaim=certs-actions-runner-dind-0 \
        --set persistence.workspace.existingClaim=workspace-actions-runner-dind-0 \
        --set dind.experimental=true \
        --set dind.debug=true \
        --set dind.metrics.enabled=false \
        --set dind.persistence.enabled=true \
        --set dind.persistence.certs.accessModes[0]=ReadWriteMany \
        --set dind.persistence.certs.size=1Gi \
        --set dind.persistence.workspace.accessModes[0]=ReadWriteMany \
        --set dind.persistence.workspace.size=8Gi \
        --set dind.resources.requests.memory="1Gi" \
        --set dind.resources.requests.cpu="1" \
        --set dind.resources.limits.memory="2Gi" \
        --set dind.resources.limits.cpu="2" \
        --set dind.livenessProbe.enabled=false \
        --set dind.readinessProbe.enabled=false \
        lazybit/actions-runner \
        --wait
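With this many flags, a values file may be easier to review and keep in version control. The sketch below translates the non-secret --set flags one-to-one into YAML and leaves the GitHub credentials on the command line so the token never lands in a file. The file name actions-runner-values.yaml is an assumption:

```shell
# Non-secret settings, translated from the --set flags of the dind install.
# The file name actions-runner-values.yaml is an arbitrary choice.
cat > actions-runner-values.yaml <<'EOF'
global:
  storageClass: nfs-client
docker: true
rbac:
  create: true
persistence:
  enabled: true
  certs:
    existingClaim: certs-actions-runner-dind-0
  workspace:
    existingClaim: workspace-actions-runner-dind-0
dind:
  experimental: true
  debug: true
  metrics:
    enabled: false
  persistence:
    enabled: true
    certs:
      accessModes:
        - ReadWriteMany
      size: 1Gi
    workspace:
      accessModes:
        - ReadWriteMany
      size: 8Gi
  resources:
    requests:
      memory: 1Gi
      cpu: 1
    limits:
      memory: 2Gi
      cpu: 2
  livenessProbe:
    enabled: false
  readinessProbe:
    enabled: false
EOF

# Install with the values file; credentials stay on the command line
# (skipped when helm is not installed).
if command -v helm >/dev/null 2>&1; then
  helm upgrade --install actions-runner \
    -f actions-runner-values.yaml \
    --set github.username="${GITHUB_USERNAME}" \
    --set github.password="${GITHUB_TOKEN}" \
    --set github.owner="${GITHUB_OWNER}" \
    --set github.repository="${GITHUB_REPOSITORY}" \
    lazybit/actions-runner \
    --wait
fi
```

Values passed with --set take precedence over those in the file, so individual settings can still be overridden per install.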

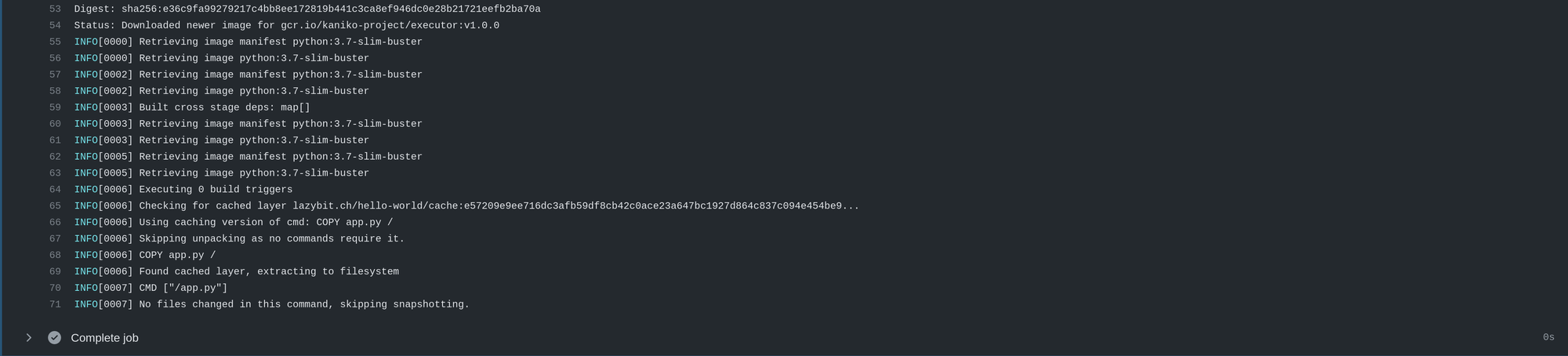

Building with kaniko in docker

kaniko expects the config.json to be mounted at /kaniko/.docker. Setting dind.kaniko=true in the deployment mounts the Opaque Secret (named docker by default) inside the dind container at /kaniko/.docker/config.json. Set dind.kanikoSecretName=my-docker-secret to specify an alternative Opaque Secret name to mount for kaniko.

The actions-runner can bind-mount the remote config.json in a docker run during the execution of a step in the job:

    env:
      DOCKER_REGISTRY: lazybit.ch

    jobs:
      build:
        name: build
        runs-on: self-hosted
        steps:
          - run: |
              docker run --rm -i \
                -v ${PWD}:/workspace/source \
                -v /kaniko/.docker/config.json:/kaniko/.docker/config.json \
                gcr.io/kaniko-project/executor:v1.0.0 \
                --dockerfile=/workspace/source/Dockerfile \
                --destination=${DOCKER_REGISTRY}/hello-world:latest \
                --context=/workspace/source \
                --cache-repo=${DOCKER_REGISTRY}/hello-world/cache \
                --cache=true

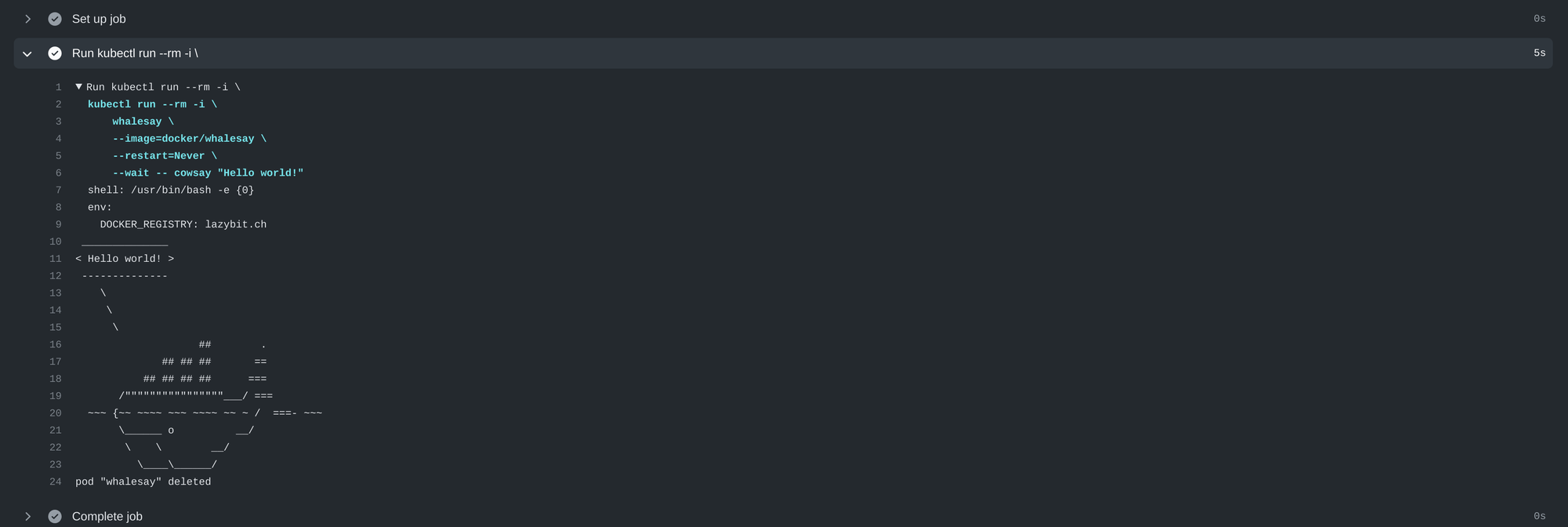

Running kubectl

Enabling rbac in the installation (--set rbac.create=true) will grant get, watch, list, create and delete on the pods and pods/logs resources. You can use kubectl in the jobs.<job_id>.steps[*].run to run Kubernetes pods during the build:

    jobs:
      cowsay:
        name: cowsay
        runs-on: self-hosted
        steps:
          - run: |
              kubectl run --rm -i \
                whalesay \
                --image=docker/whalesay \
                --restart=Never \
                --wait -- cowsay "Hello World"